For months, MrConsumer’s email box has been flooded with emails from Groupon.

Groupon sells discount certificates for various restaurants and local service businesses. I usually buy the twice-a-year oil changes for my car through Groupon, for use at my repair shop. Unfortunately, the price has crept up, so in November I did not buy one from them for my December servicing.

That did not stop Groupon (and perhaps even encouraged it) from literally deluging me with emails, often several an hour, for oil changes and more.

*MOUSE PRINT:

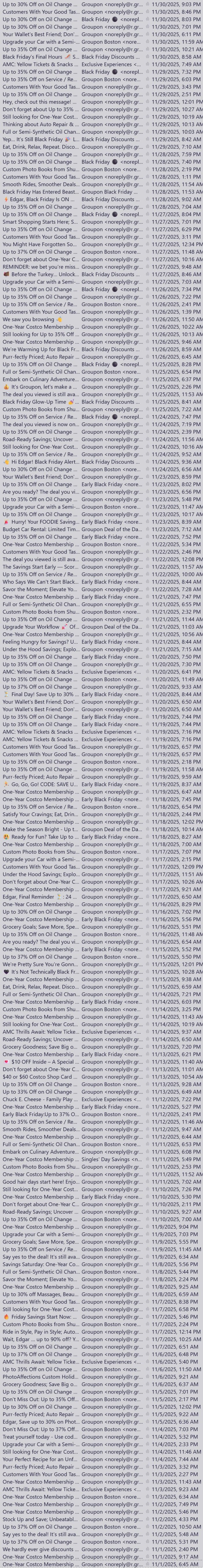

Here is a list of the emails that Groupon sent to me this past November alone — 209 of them, if you can believe it.

Scroll down the list.

Sometimes they arrive minutes apart, up to a dozen a day. December was even worse: Groupon sent me 313 emails that month!

Congress passed the CAN-SPAM law in 2003. Contrary to popular belief, it does not ban unsolicited commercial email (spam) or limit how much an advertiser can send you. Instead, it sets various requirements, including that senders give recipients a simple way to opt out of receiving more such emails from that advertiser.

*MOUSE PRINT:

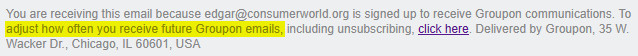

Groupon goes a step further and says it lets you adjust how often you hear from it.

Great… but there is no such option when you click their link.

*MOUSE PRINT:

I don’t want to opt out altogether, because I want to hear about a great deal on oil changes at my repair shop or membership discounts at Costco, but I don’t want minute-to-minute updates.

So, we asked the PR folks at Groupon why they send so many emails a day to customers, and whether they honestly believe that up to a dozen emails a day is appropriate. We also wanted to know what happened to their promised option to reduce the frequency of emails.

The company’s customer service department responded, and in a moment of candor said:

We sincerely apologize for the volume of emails you have been receiving and for the frustration this has caused.

You are absolutely right that receiving numerous emails in a single day is not a positive customer experience. While we intend to share relevant offers, it is clear that in this case, our frequency did not align with your preferences, and we take responsibility for that.

We would like to inform you that you have the option to limit the number of emails you receive from us on a daily basis.

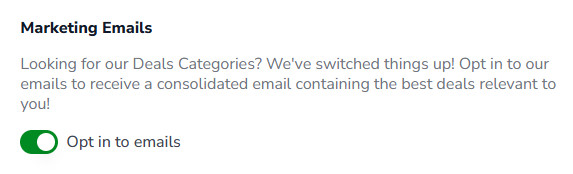

In fact, the option to limit emails is all or nothing; it does not let you choose how many you receive.

So, given Groupon’s apparent inability to sensibly limit the number of emails it sends customers, I opted out on January 1.

This whole experience suggests that federal law needs to be amended to ban excessive emailing to consumers. What do you think?